Commerce Grid Reporting API

The Commerce Grid Reporting API is a RESTful service that gives programmatic access to detailed reporting data from our internal uSlicer platform. It’s built as a replacement for the older PMC API, with a focus on simplifying how clients interact with reporting endpoints.

The CGrid API acts as a proxy for the data managed by uSlicer, but it introduces its own request and response formats, giving clients a cleaner and more consistent structure to build on.

If you already use the PMC API, the CGrid API is the next step. The goal is a smooth transition that keeps the same reporting capabilities behind a more modern interface.

Authentication

Access to the Commerce Grid API is secured using OAuth 2.0, managed through the CGrid IAM service.

Before making any requests to the API, your application needs to obtain an access token. This token must be included in the Authorization header of each request.

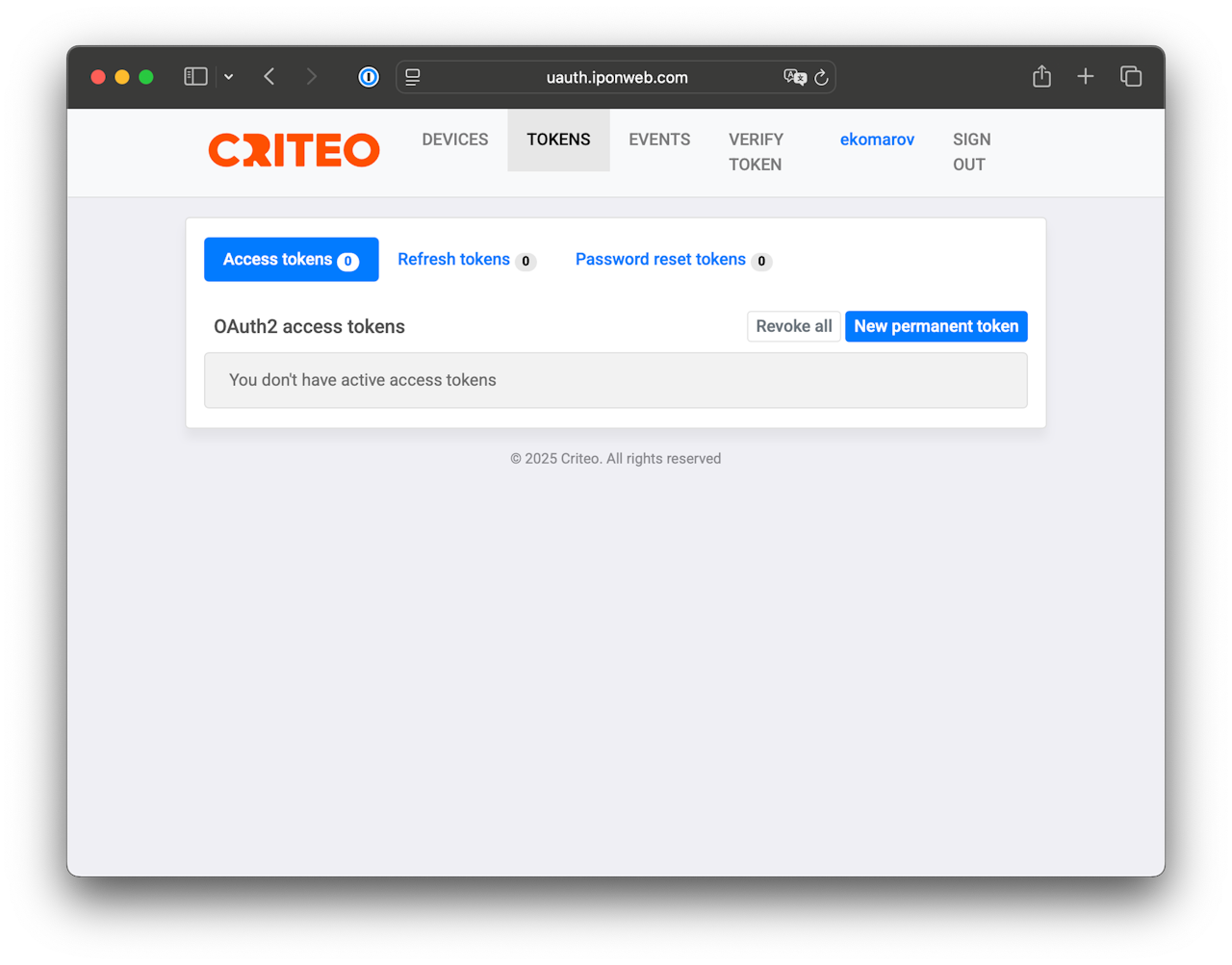

Authentication: Login & Check Tokens Section

To get a token, first log in to u-Auth (https://uauth.iponweb.com/uauth/settings/#access) with your Commerce Grid account credentials.

After logging in, go to the “Tokens” section. If this is your first time getting a token, the list will be empty.

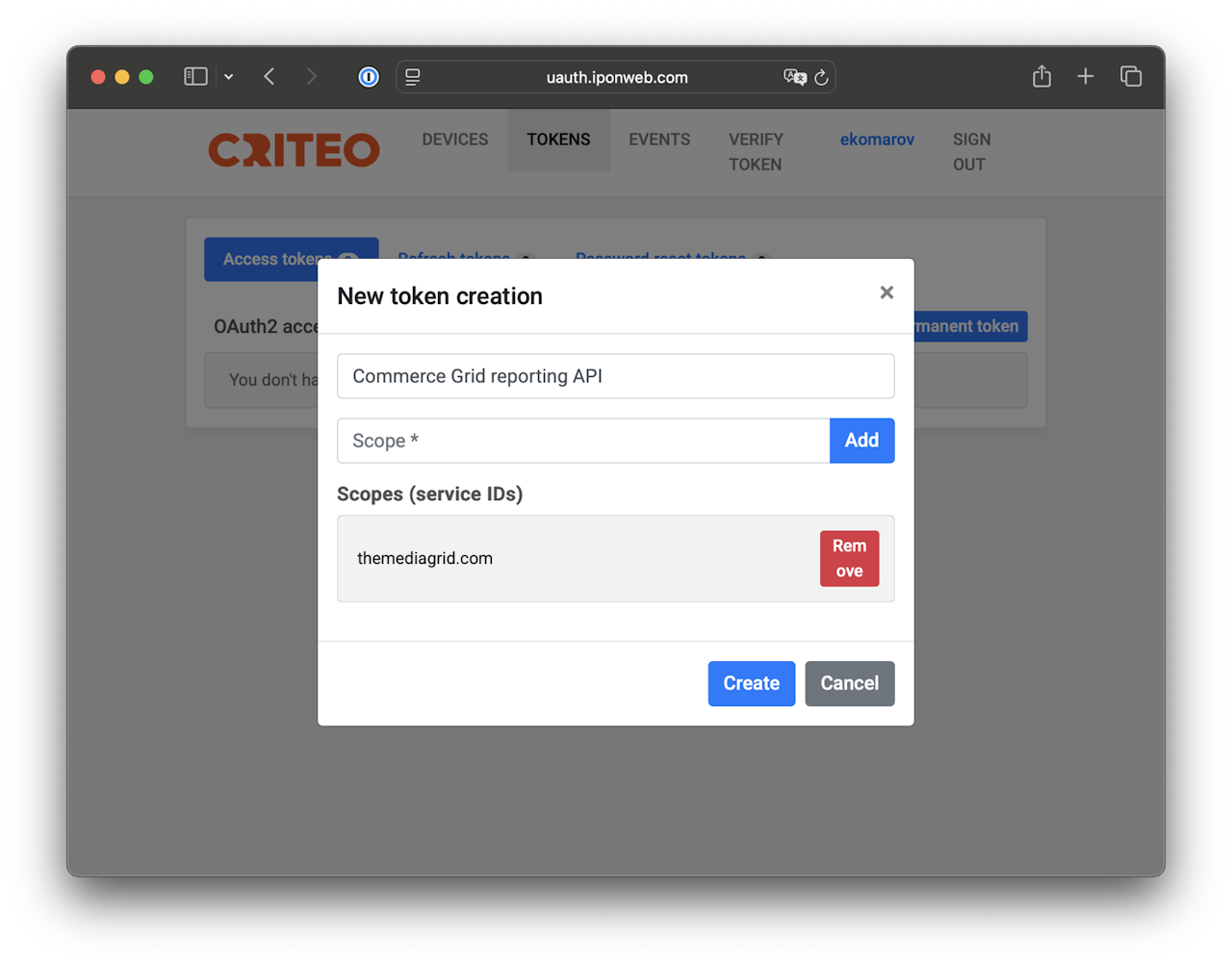

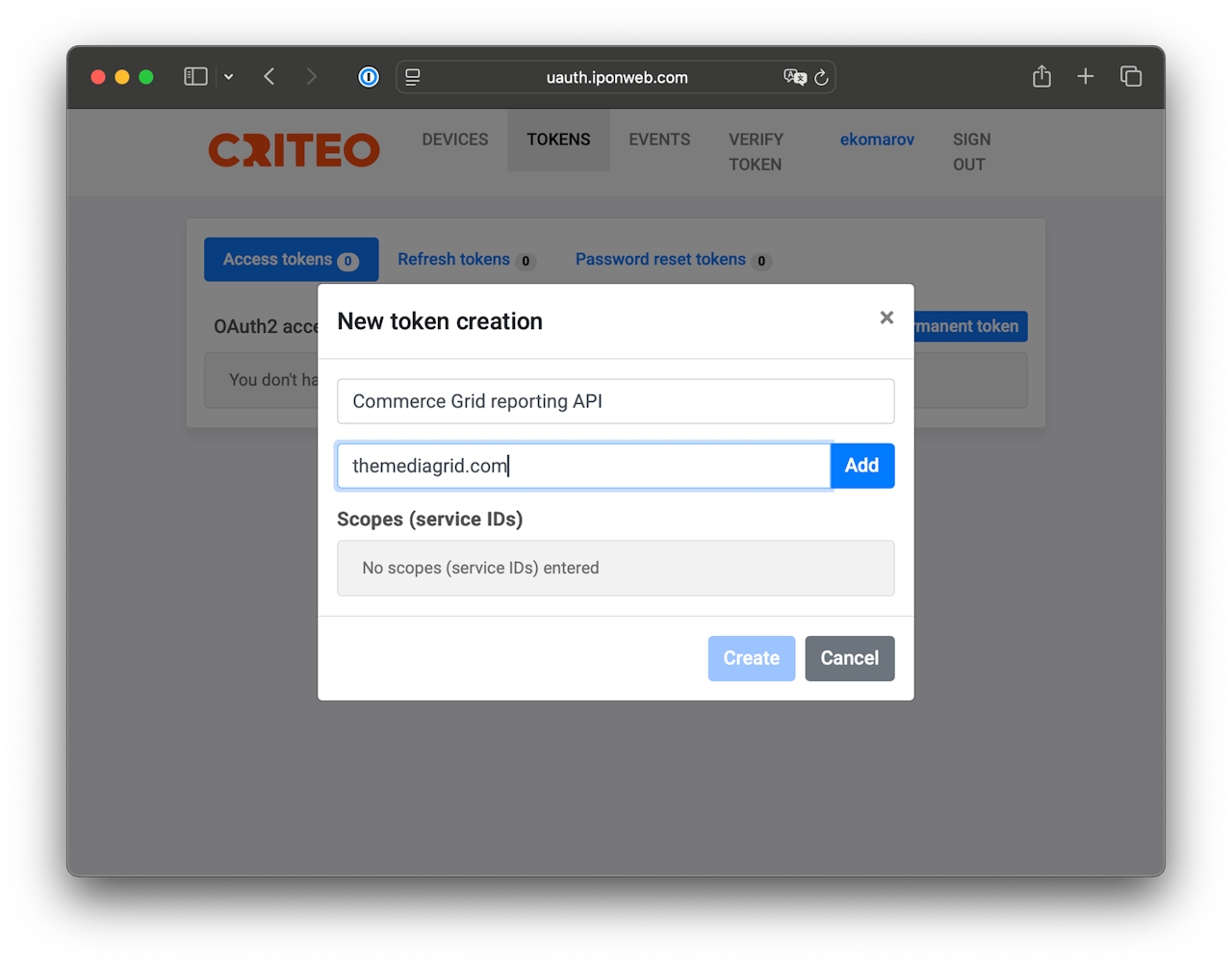

Authentication: Add A New Token

To create a new token, click the “New Permanent Token” button on the right.

You can change the autogenerated token name to something more meaningful, for example, “Commerce Grid Reporting API”.

Type “themediagrid.com” in the “Scope” field, then press the “Add” button on the right.

Once all fields are filled in, click “Create” to create the new token.

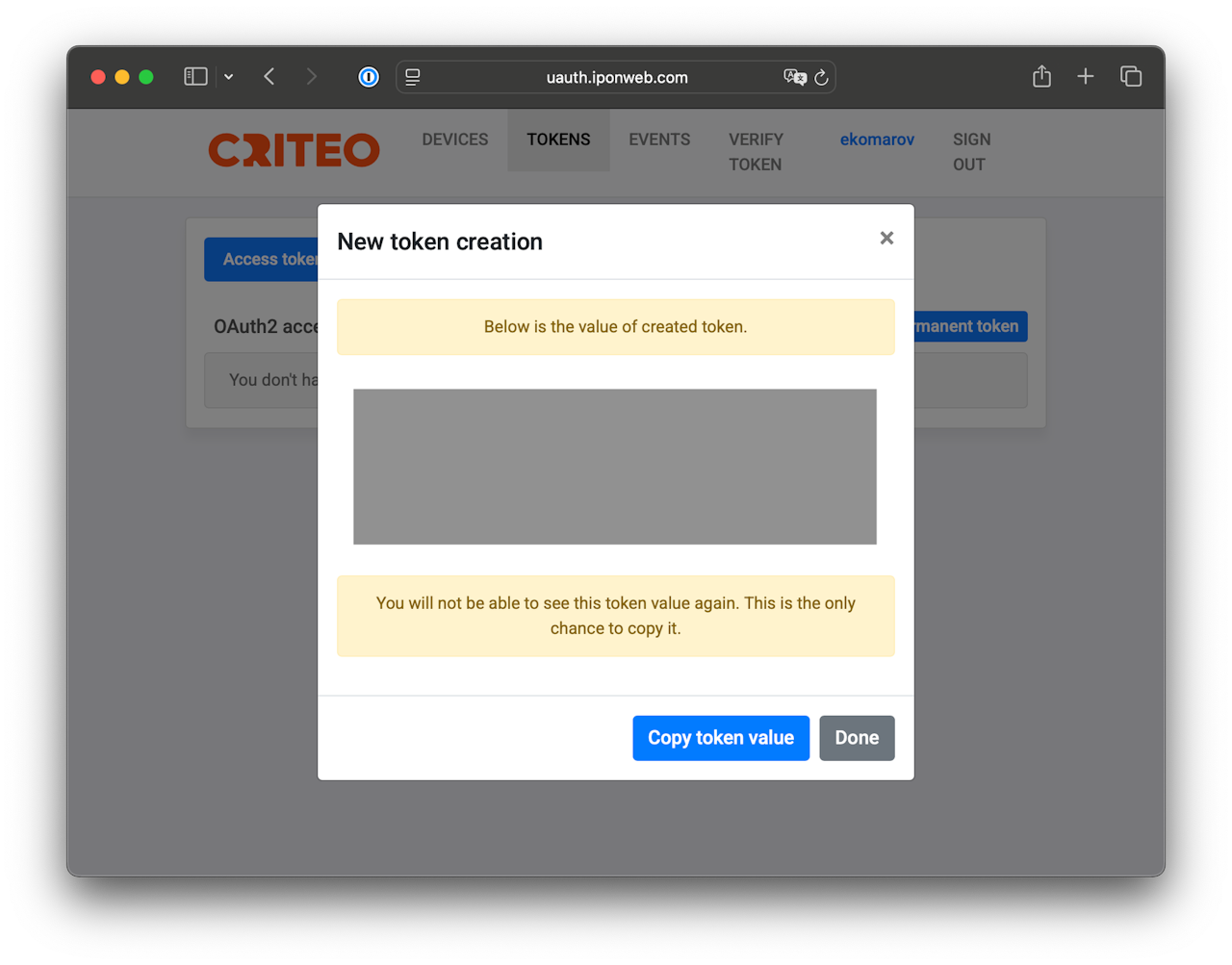

Authentication: Receive Your Token & Save It

When the “New Permanent Token” process completes, the token is shown in the next window. In the screenshot, the grey box marks where your token will appear.

Press “Copy token value” and store the token somewhere secure and accessible. The token value is shown only once: if you lose it, or close the window before saving it, repeat the process to create a replacement token.
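Rather than pasting the token into scripts, one option is to keep it in an environment variable and read it at runtime. The sketch below assumes a variable named `CGRID_API_TOKEN`; that name is hypothetical and not defined by Commerce Grid, so substitute whatever secret storage your deployment already uses.

```python
import os


def load_token(env_var: str = "CGRID_API_TOKEN") -> str:
    """Read the Commerce Grid access token from an environment variable.

    CGRID_API_TOKEN is a hypothetical name chosen for this example.
    Surrounding whitespace (e.g. a trailing newline from copy/paste) is stripped.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Environment variable {env_var} is not set; "
            "create a token in u-Auth and export it before calling the API."
        )
    return token
```

For example, run `export CGRID_API_TOKEN='<your token>'` in the shell that launches your script, then call `load_token()` wherever the token is needed.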

When you are finished, press “Done” to return to the token list.

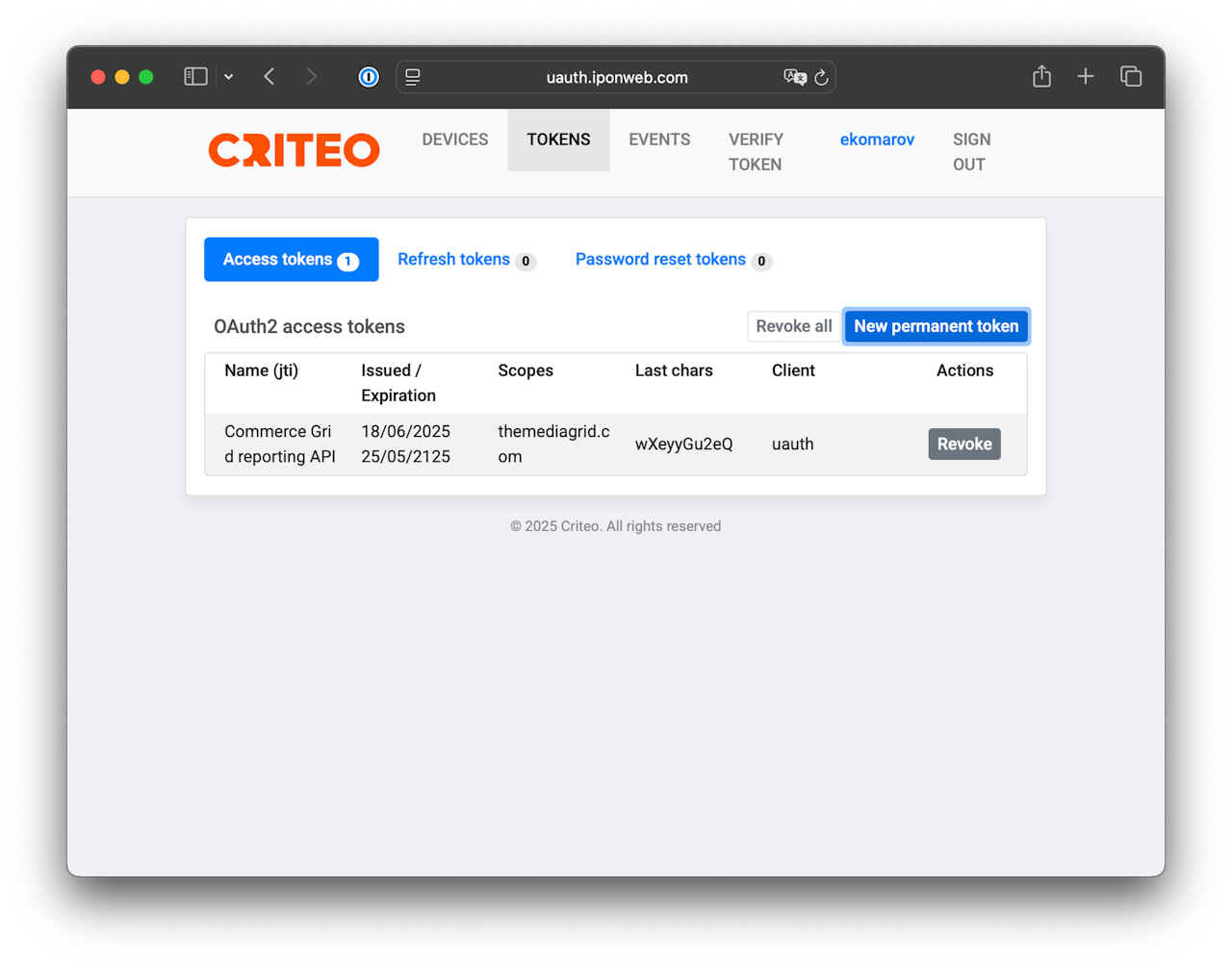

Authentication: Revoking A Token

To revoke a token you have created, click the “Revoke” button on the right side, or click “Revoke All” above the token list to revoke every token.

Getting Started: Querying the API

Base URL

https://commercegrid.criteo.com/api/uslicer/reporting/

Required Headers

Make sure to include the following headers in every request:

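| Header | Value | Description |
|---|---|---|
| Authorization | Bearer YOUR_ACCESS_TOKEN | OAuth 2.0 access token |
| Content-Type | application/json | Request body format |
| Accept | application/json | Response format |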
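The required headers can also be attached from Python using only the standard library. This is a minimal sketch, not an official client: `build_headers`, `build_report_request` and `fetch_report` are illustrative names, `BASE_URL` is the Base URL listed above, and `payload` is the same JSON body you would otherwise send with curl.

```python
import json
import urllib.request

BASE_URL = "https://commercegrid.criteo.com/api/uslicer/reporting/"


def build_headers(token: str) -> dict[str, str]:
    """Return the three headers required on every request."""
    return {
        "Authorization": f"Bearer {token}",  # OAuth 2.0 access token
        "Content-Type": "application/json",  # request body format
        "Accept": "application/json",        # response format
    }


def build_report_request(token: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST request to the reporting endpoint."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        BASE_URL, data=body, headers=build_headers(token), method="POST"
    )


def fetch_report(token: str, payload: dict, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response body."""
    req = build_report_request(token, payload)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Building the `Request` separately from sending it keeps the header and body logic testable without network access. `urlopen` raises `urllib.error.HTTPError` when the server returns an HTTP error status, so catch it if you want to inspect the error body.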

Here’s a simple example using curl:

```shell
curl -X POST https://commercegrid.criteo.com/api/uslicer/reporting/ \
  -H "Authorization: Bearer YOUR_ACCESS_TOKEN" \
  -H "Content-Type: application/json" \
  -H "Accept: application/json" \
  -d '{
    "start_date": "2025-05-15",
    "end_date": "2025-05-22",
    "split_by": ["granularity_day"],
    "timezone": 0,
    "order_by": [
      {"name": "granularity_day", "direction": "ASC"}
    ],
    "add_keys": ["granularity_hour"],
    "data_fields": ["pub_payout"],
    "limit": 100,
    "offset": 0,
    "include_others": true
  }'
```

Example response:

```json
{
  "status": "success",
  "uslicer-spark.version": "4.19.0",
  "rows": [
    {
      "data": [
        {"name": "pub_payout", "value": 7095.69, "percent": "24.52106"}
      ],
      "name": "2025-05-15",
      "confidence_range": null,
      "mapping": null
    },
    {
      "data": [
        {"name": "pub_payout", "value": 7225.84, "percent": "24.97080"}
      ],
      "name": "2025-05-16",
      "confidence_range": null,
      "mapping": null
    },
    {
      "data": [
        {"name": "pub_payout", "value": 8233.03, "percent": "28.45142"}
      ],
      "name": "2025-05-17",
      "confidence_range": null,
      "mapping": null
    },
    {
      "data": [
        {"name": "pub_payout", "value": 6382.58, "percent": "22.05672"}
      ],
      "name": "2025-05-18",
      "confidence_range": null,
      "mapping": null
    }
  ],
  "total": {
    "data": [
      {"name": "pub_payout", "value": 28937.15}
    ],
    "dates": ["2025-05-15", "2025-05-16", "2025-05-17", "2025-05-18"],
    "records_found": 4,
    "confidence_range": null
  },
  "others": {
    "data": [
      {"name": "pub_payout", "value": 0, "percent": "0.00000"}
    ],
    "confidence_range": null,
    "key_count": 0
  }
}
```
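Each metric in the response is nested inside a `data` list. A minimal sketch for flattening it, assuming a single `split_by` key as in the example request (so each row is one day) and the field names shown in the response above; `rows_to_dict` and `check_totals` are illustrative names. Note that `percent` is returned as a string, so convert it with `float()` if you need it numerically.

```python
def rows_to_dict(response: dict) -> dict[str, dict[str, float]]:
    """Map each row's name (here, the day) to its {data_field: value} pairs.

    Assumes one split_by key, as in the example request, so rows are flat.
    """
    if response.get("status") != "success":
        raise ValueError(f"unexpected status: {response.get('status')!r}")
    return {
        row["name"]: {item["name"]: item["value"] for item in row["data"]}
        for row in response["rows"]
    }


def check_totals(response: dict, field: str, tolerance: float = 0.05) -> bool:
    """Check that the summed row values match total.data within a tolerance."""
    rows_sum = sum(
        item["value"]
        for row in response["rows"]
        for item in row["data"]
        if item["name"] == field
    )
    reported = next(
        item["value"] for item in response["total"]["data"] if item["name"] == field
    )
    return abs(rows_sum - reported) <= tolerance
```

The default tolerance of 0.05 in `check_totals` is an arbitrary choice to absorb rounding: the four sample rows sum to 28937.14, while `total` reports 28937.15.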